How the Karen Read Trial Compares to Generative AI in Education

A post for overlapping true crime and EdTech circles.

In May, I attended an event hosted by a tech giant selling its vision of generative AI in K-12 education. As you may imagine, there were big promises and a dearth of peer-reviewed supporting evidence. I was delighted to see someone I knew from Maine there. I asked him if he was following the ongoing Karen Read murder trial in Massachusetts.1 He said he was. I told him I felt like Read’s defense attorneys at the event, reacting to the prosecution’s (or on that day, the tech giant’s) outlandish claims and weak evidence.

Read’s second trial this spring was so interesting that it still occupies space in my brain. As readers of my Substack know, so does generative AI in education. I have noticed many connections between the two seemingly unrelated topics.

Trigger Warnings: This post contains language about murder, violence, suicide, and, unbelievably, obscene words that start with the letters C and R. It also references comments about Read’s anatomy related to her Crohn’s disease. Additionally, this post includes AI-generated images of historical figures from marginalized and oppressed communities.

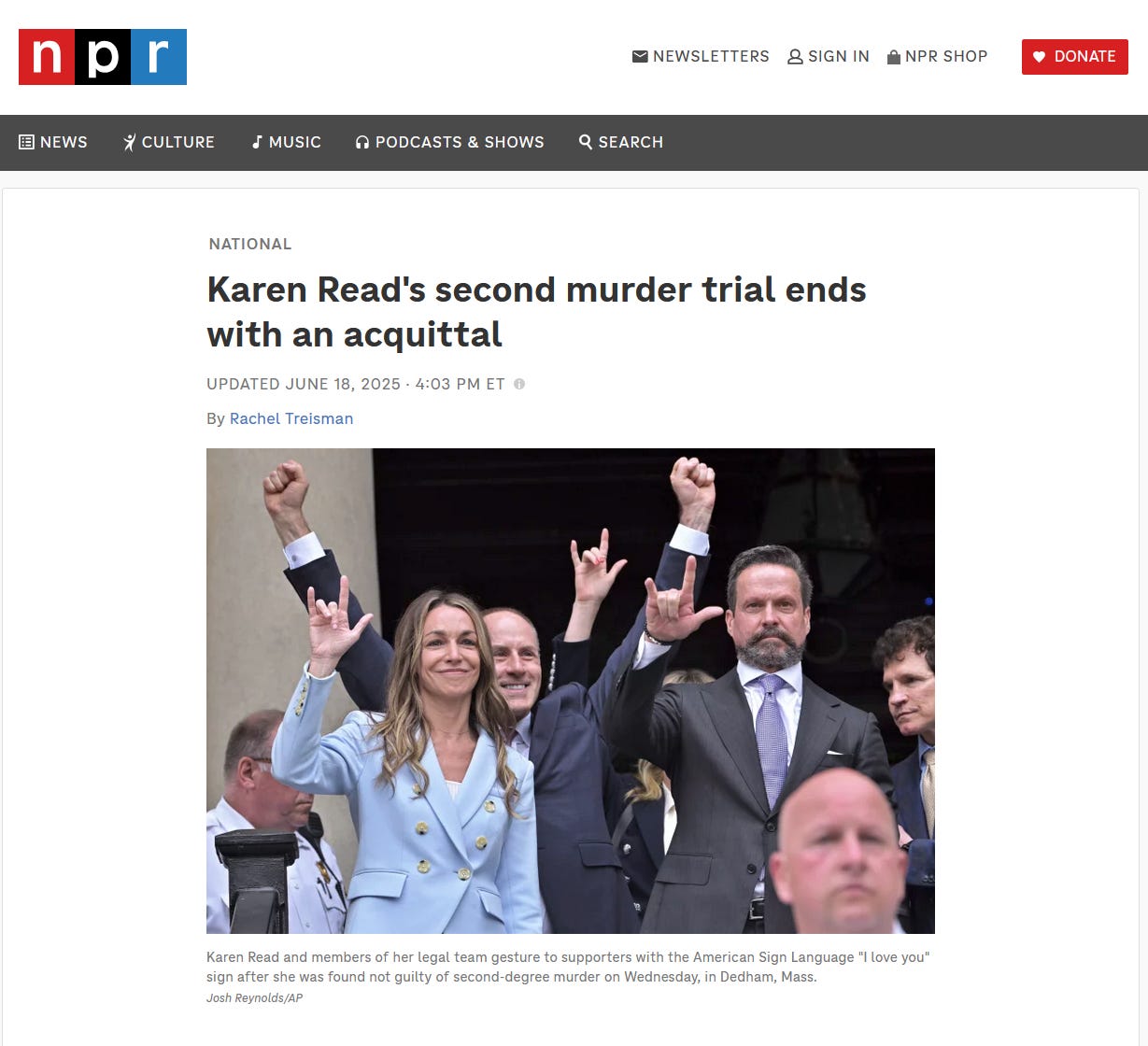

While I won’t give a complete rundown of the case, here’s a basic overview for the uninitiated: On January 29, 2022, Boston police officer John O’Keefe’s body was found on the front lawn of another Boston police officer’s home in Canton, MA. O’Keefe’s girlfriend, Karen Read, was arrested and charged with murder for allegedly hitting him with her car. A 2024 trial ended in a hung jury. The second trial in June ended with her acquittal on all charges except operating a vehicle under the influence.

In my humble opinion, Read is innocent because for her to be guilty, all of these implausible things would have to be true:

Almost ten people leaving a house after O’Keefe was allegedly struck missed a dying 6’2” man on the ground in a small front yard.

A dog wasn’t the reason for the bite and scratch marks found on O’Keefe’s arm.

Getting hit by a car neither broke nor bruised O’Keefe’s arm nor injured his lower body, nor left any of his DNA on the taillight pieces found at the scene.2

Dighton, MA police officer Nicholas Barros (who was neither Canton PD nor Boston PD) was wrong about the state of the taillight on Read’s car.

Snowplow driver Brian “Lucky” Loughran was wrong about not seeing O’Keefe’s body on the front lawn of a house he had been to many times.

The crash reconstructionists the FBI hired, who found O’Keefe was not hit by a car, were wrong.

With that established, let’s examine all the ways the Karen Read case is eerily similar to generative AI in education.

Improper Connections Between the Lead Investigator and Witnesses = Improper Connections Promoting Generative AI in Education

Read’s defense team discovered improper connections between the officers investigating the crime and both witnesses and potential suspects. For example, Courtney, the sister of the lead investigator, Former3 State Trooper Michael Proctor, texted him that she had heard from her friend Julie Albert, "When this is all over, she wants to get you a thank you gift." Proctor’s response was not, “That would be inappropriate.” It was “Get Elizabeth one,” referring to his wife.

Why would the gift be inappropriate? Julie Albert is married to the brother of Brian Albert, the police officer on whose front lawn O’Keefe was found. Additionally, an investigation found that Proctor drank on the job with Albert’s brother. Proctor clearly had connections to the family and should have recused himself from the case immediately.

These inappropriate connections resemble the relationships between big tech companies and the entities that should be scrutinizing their claims about generative AI but are instead partnering with them. For example:

“Common Sense Media… has received funding from OpenAI—the organization wouldn’t say how much—to produce educational materials about AI for teachers and families,” as documented by Vauhini Vara in Bloomberg.

Vara also shared that, “Microsoft announced partnerships for AI training with the biggest teachers’ unions in the US; Google and OpenAI are working with the unions too.”

The American Federation of Teachers is partnering with Microsoft, OpenAI, and Anthropic to promote the use of generative AI among teachers. The AFT should be zealously defending children and teachers, not partnering with tech giants.

The state of Oregon is paying NVIDIA $10 million to promote generative AI to students and teachers. You read that right. Taxpayer money is going to a tech giant to promote its product.

Miami-Dade County Public Schools and Albuquerque Public Schools are promoting Gemini for Google for Education. Teachers in these districts spent their time shooting commercials for Google for Education.

Examine the expressions on the students’ faces in the YouTube thumbnails. What do you notice? What do you wonder? Do the students look interested or disinterested?

Former Trooper Proctor’s Offensive Text Messages = Offensive Generative AI Outputs and Practices

Former Trooper Proctor sent friends, colleagues, and supervisors obscene text messages regarding this case. Before proceeding, please note the trigger warnings provided above. You’ve been warned.

The text messages included:

He referred to Read as the R word.

When he was going through Read’s phone, he texted a group chat, “No nudes so far.”

Proctor texted offensive things about Read’s anatomy related to her suffering from Crohn’s Disease.

In a text to his sister, Proctor wrote, “Hopefully she kills herself.”

While it would be challenging to match Proctor in terms of offensiveness and cruelty, we have seen some examples from the generative AI industry that are at least comparable.

LLMs have generated text suggesting self-harm. The New York Times reported that Adam Raine killed himself after extensive engagement with ChatGPT, which generated text about suicide methods.

Generative AI is known for generating text and images that amplify racism and misogyny.

Generative AI companies are mimicking historical figures, and the results are offensive. I documented this when I wrote about SchoolAI’s Anne Frank chatbot.

The previously mentioned Vauhini Vara Bloomberg article shared an example where a John Brown chatbot, “insisted, as Brown, that violence is never the answer.”

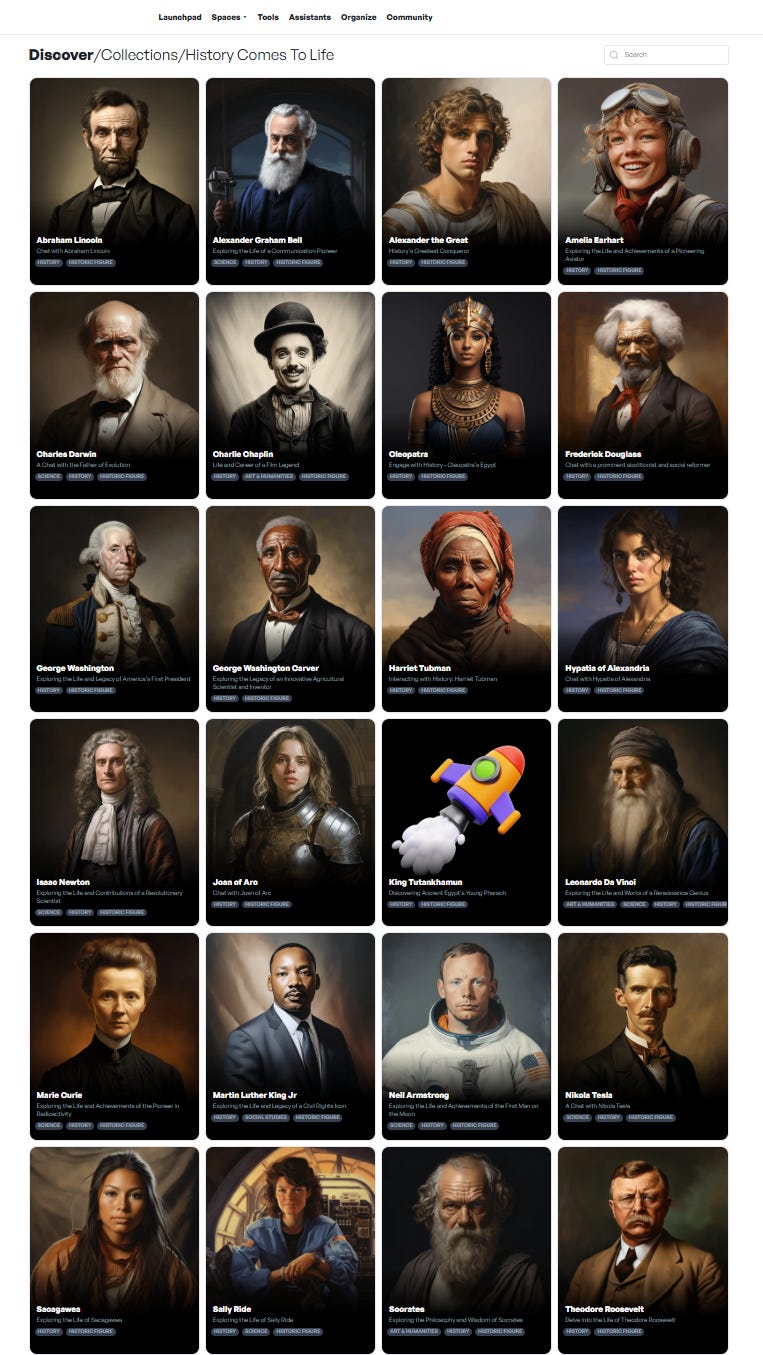

This is SchoolAI’s History Comes to Life Collection.

What do you notice? What do you wonder?

I notice an enslaver. I notice BIPOC historical figures, one of whom is Harriet Tubman. Does a “Harriet Tubman” chatbot do her life justice?

The Whistleblowers: Officer Barros and Lucky Loughran = Gebru, Mitchell, and Others

Two defense witnesses served as whistleblowers. They testified, even though what they saw did not fit the prosecution's narrative that Read murdered O’Keefe. Dighton, MA police officer Nicholas Barros saw the taillight on Read’s Lexus before it was entered into evidence. The damage he saw was much less than the damage to the taillight when it was entered into evidence. The damage Barros attested to is attributable to Read backing her car into O’Keefe’s car when she left his house to look for him.

Brian “Lucky” Loughran testified that when he plowed the street in front of the house where O’Keefe’s body was found, he did not see O’Keefe on the ground, even though O’Keefe would have had to be there if Read had hit him with her car.

Barros and Loughran are similar to Timnit Gebru and Margaret Mitchell, who testified to the harms and limits of generative AI when they co-authored the Stochastic Parrots paper while employed by Google. Calling Gebru and Mitchell “witnesses” does not do justice to their contributions to our understanding of generative AI. Don’t worry, their names will appear again in the section of this post dedicated to the defense’s expert witnesses.

Other generative AI whistleblowers include Microsoft engineer Shane Jones, who wrote to then Federal Trade Commission Chair Lina Khan and Microsoft’s board of directors to warn that Copilot was not safe for public use. Additionally, former OpenAI researcher Suchir Balaji, before his death, warned that the company violates copyright law.

The Prosecution’s Expert Witnesses = Generative AI Promoters Who Should Know Better

The prosecution used expert witnesses who made claims about the alleged collision that were backed by little more than a video of a Lexus with blue paint on it reversing at two miles per hour, while a man with a doctorate, dressed up as the victim, subtly moved his arm to collide with it.

That expert was Dr. Judson Welcher, who, like those promoting generative AI in education, argued a case with flimsy evidence. Defense Attorney Alan Jackson called the blue paint test “some ridiculous blue paint kindergarten project” during his closing argument. Welcher was unique in his pomposity. Two other Welcher trial highlights were:

Welcher cited an article that said 61% of pedestrian motor vehicle collision injuries are head injuries. The study is almost fifty years old. It’s from 1979, before SUVs, which tend to cause lower-body injuries, had a considerable share of the domestic auto market.

Welcher proclaimed, “They didn’t actually X-ray, from the records I’ve seen, either his [O’Keefe’s] hand or his arm.” Oh, but they did. Oops.

Welcher reminds me of Professor Ethan Mollick, who promotes the use of generative AI in education.

Brian Merchant wrote about Mollick’s excitement about GPT-5: “Ethan Mollick’s breathless endorsement of GPT-5 (“GPT-5: It just does stuff”) already looks like an ad for New Coke.”

Mollick said that instructors [teachers] are “becoming coders now” because of generative AI. That is similar to “They didn’t actually X-ray.” Teachers are teachers. Not coders.

Mollick wrote in his book that, “We have invented technologies, from axes to helicopters, that boost our physical capabilities; and others, like spreadsheets, that automate complex tasks; but we have never built a generally applicable technology that can boost our intelligence.” Technology Ethicist and Professor Ariel Guersenzvaig wrote of this, “This is so demonstrably and obviously false that it’s poignant,” as part of a fantastic analysis of this quote on LinkedIn.

The Defense’s Expert Witnesses = The Academics Waving the Red Flags

Forensic Pathologist Dr. Marie Russell provided compelling testimony about the dog bites on O’Keefe’s arm. She also explained how Read’s statements, wondering if she hit O’Keefe, were an acute grief reaction, which is common for the loved ones of people who die suddenly.

Dr. Elizabeth Laposata explained that hypothermia did not play a role in O’Keefe’s death, which raised questions about whether he was incapacitated outdoors when the prosecution said he was. Dr. Laposata skillfully parried prosecution questions and used them as an opportunity to emphasize that a car never hit O’Keefe.

The forensic experts from ARCCA that the FBI hired, Dr. Dan Wolfe and Dr. Andrew Rentschler, credibly testified that, based on their reconstructions, O’Keefe’s injuries were not from a car.

These credentialed experts and their sober testimony remind me of the academics waving red flags about applying generative AI to tasks that require accuracy, such as education. They include authors of the Stochastic Parrots paper, such as Drs. Timnit Gebru, Margaret Mitchell, and Emily M. Bender. I wrote about them and others in this post.

Teachers: Follow These Experts To Learn AI

Before using AI in K-12 classrooms, teachers, administrators, and district leaders should understand what AI is and is not. Implementing AI apps without a solid foundational understanding does not serve students. But who to turn to for critical analysis of AI instead of hype?

Special Prosecutor Hank Brennan = OpenAI CEO Sam Altman

As you might expect, a special prosecutor who stepped up to prosecute a woman whom scientists hired by the FBI considered innocent said some odd things at the trial.

Hank Brennan:

Employed misogyny and ageism when asking defense expert, Dr. Marie Russell, “[Do] you have any memory issues?” The question was so offensive that Russell couldn’t help but audibly gasp.

Replied to Brian “Lucky” Loughran referencing his wife’s death with, “Sorry for your loss, but you are aware during the prosecution’s case that there has been pressure on you to testify in a certain way. Isn’t that fair to say?” Sorry for your loss, but! It’s hard to say which has less empathy and compassion, Hank Brennan or generative AI.

Insinuated that Dr. Andrew Rentschler was biased because the defense attorneys treated him to a ham sandwich and he laughed at their jokes. Can you imagine being on the jury and having your time wasted with that line of questioning?

Released a statement after the verdict that said, “I concluded that the evidence led to one person, and only one person. Neither the closed federal investigation [it’s not known if it is actually closed] nor my independent review led me to identify any other possible suspect or person responsible for the death of John O’Keefe.” Statements like these, made after acquittals, undermine confidence in the criminal justice system. An officer of the court like Brennan should respect a jury verdict, not undermine it. Why even have a jury trial if Hank Brennan thinks you’re guilty? Even Michael Morrissey, the district attorney who hired Brennan, had the good sense to say only, “The jury has spoken.” For more on how Brennan’s statement undermines justice, please watch this video from attorney Martin E. Radner.

Brennan’s penchant for saying interesting things is reminiscent of OpenAI CEO Sam Altman. Here is just a brief sample:

Responding to the legitimate criticisms raised in the Stochastic Parrots paper by Tweeting, “i am a stochastic parrot, and so r u”

Tweeting “her” after a GPT-4o demo featuring a voice that sounded like Scarlett Johansson’s, even though the actor had declined to lend her voice to the product.

This whining tweet: “>be me

>grind for a decade trying to help make superintelligence to cure cancer or whatever

>mostly no one cares for first 7.5 years, then for 2.5 years everyone hates you for everything

>wake up one day to hundreds of messages: ‘look i made you into a twink ghibli style haha’”

Tweeting an image of the Death Star before the rollout of the underwhelming GPT-5.

Writing a blog post saying that a ChatGPT-generated essay uses “0.000085 gallons of water, roughly one-fifteenth of a teaspoon” despite offering no evidence to support the claim.

Jennifer McCabe = ???

Jennifer McCabe was a prosecution witness who was especially disliked by people who wanted to see Karen Read acquitted. She was adamant that Read struck O’Keefe even though she didn’t see it happen. I have said too many controversial things in this post to share my thoughts about the promoter of generative AI in education who equates to McCabe. Maybe if you Venmo me enough to buy a fancy drink at my favorite coffee shop, we can meet virtually and I’ll tell you EVERYTHING!

Justice for John O’Keefe

While the comparisons between the players in the Read trial and figures in generative AI in education are interesting, the most important thing is getting justice for John O’Keefe. If this post piques anyone's interest in this case and the pursuit of the person or persons responsible for O’Keefe’s death, that’s a win.

Further, Karen Read was tried twice for a murder she obviously did not commit. There needs to be accountability for those in the Norfolk District Attorney’s Office, the Massachusetts State Police, and the Canton Police Department who facilitated this.

Justice for John O’Keefe.

Let’s Talk

What do you think? What do you notice about the efforts to sell generative AI to K-12 education? What connections are you making? Comment or ask a question below. Connect with me on BlueSky: tommullaney.bsky.social.

Does your school or district need a tech-forward educator who critically evaluates generative AI? I would love to work with you. Reach out on BlueSky or email mistermullaney@gmail.com.

AI Disclosure:

I wrote this post without using generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post using the outline without using generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to help edit the post. I have Grammarly GO turned off.

1. Maine, Massachusetts, close enough.

2. Taillight pieces were found hours after O’Keefe was discovered. None were found when his body was discovered or in the immediate aftermath.

3. Proctor was fired for inappropriate conduct in the Read case.

This comparison is both creative and unsettlingly accurate. The parallels between conflicted expert witnesses promoting weak evidence and the current AI hype in education are hard to ignore. Teachers’ unions partnering with tech giants to promote generative AI feels particularly problematic when these tools haven't been rigorously tested for educational efficacy. The lack of independent oversight combined with financial incentives creates exactly the kind of environment where students become guinea pigs for unproven technology.