Pedagogy, Thinking, And The First Draft Or What Teachers Should Consider About AI And Writing

“When it comes to writing, what matters is the writing.” - Author John Warner, February 1, 2024.

Generative AI is often suggested as a writing aid and first-draft generator, but is this pedagogically sound? Let’s explore pedagogy, writing, and “AI.”

Author’s Notes:

This post relies on several experts from higher ed. Because of my K-12 background, I usually draw from K-12 experts. But writing is a big concern in higher ed. Who knew? Higher ed writing experts have published so much about writing and pedagogy that I had to include their work. Writing is writing, and their ideas apply to K-12 teachers.

When quoted text is in bold, the emphasis is mine.


The Purpose Of Teaching Writing

My favorite argument about the purpose of teaching writing comes from Dr. Lauren M.E. Goodlad, Professor of English and Comparative Literature at Rutgers University, and Dr. Samuel Baker, Professor of English at the University of Texas at Austin:

“The purpose of teaching writing has never been to give students a grade but, rather, to help them engage the world’s plurality and communicate with others. That the same entrepreneurs marketing text generators for writing papers market the same systems for grading papers suggests a bizarre software-to-software relay, with hardly a human in the loop. Who would benefit from such ‘education’?”

Goodlad and Baker go on to raise concerns about Large Language Models (LLMs) and how they are marketed:

“If one recurrent problem with LLMs is that they often ‘hallucinate’ sources and make up facts, a more fundamental concern is that the marketers of these systems encourage students to regard their writing as task-specific transactions, performed to earn a grade and disconnected from communication or learning.”

Solidarity With Students Or Writing At Gunpoint

In my twelve years in the classroom, I noticed a problem with student writing: Students did not consent to writing assignments or want to do them. I call this “writing at gunpoint.”

Here is a hypothetical writing assignment scenario:

You, the reader, must complete a five-paragraph essay about a topic I deeply care about. Here is the prompt:

Mr. Mullaney contends that NBA officials cost the 1990s New York Knicks at least two NBA championships. Evaluate this claim by citing specific evidence. Construct an argument that Mr. Mullaney is correct or incorrect.

What if your essay does not meet my standards? What if I consider your essay a failure? Here are the consequences:

You will not receive your next pay raise or promotion.

You will be labeled a failure at your job.

I may call your parent or guardian in for a conference to discuss how poorly you performed.

If you were expected to do something you considered unimportant, with serious consequences for failure, wouldn’t you turn to ChatGPT? I would!

People “writing at gunpoint” turn to ChatGPT. How do we know? Look at academia, where there is pressure to “publish or perish.” I blog when the spirit moves me, but professors don’t have that luxury.

In the last two years, appearances of the word “delve” and the phrase “as of my last knowledge update,” both of which ChatGPT regularly generates, have skyrocketed in academic papers.

In his New York Times piece, A.I.-Generated Garbage Is Polluting Our Culture, neuroscientist and novelist Erik Hoel shared that other terms associated with AI-generated text have also increased in peer reviews of scientific papers about AI.

If academics are turning to ChatGPT to get their papers written, without even editing the output, imagine how tempting it must be for K-12 students.

So what is the solution?

I don’t have the answers, but I have an idea: Solidarity. In this case, solidarity with students, teachers, and those harmed by AI.

How can we be solidaric with students? Here are some suggestions:

Not every assessment needs to be a test or essay.

Allow students to handwrite, type, or use speech-to-text. As a former Special Education teacher, I ask that you not mandate handwritten essays or notes. That is not an inclusive practice.

Set aside in-class time for writing assignments instead of sending them home. That helps the teacher create a positive atmosphere for writing while avoiding homework’s many problems.

Give students choice in how they are assessed and in what they write.

Offer narrative writing as one way students can show what they have learned. Students who pick it are consciously choosing to write.

The Value Of Prewriting

Two things can be true: Not every assessment has to be a written piece, and there is academic value in having students write. Let’s start with prewriting.

Prewriting is valuable for students. Elementary educator Angela Griffith said prewriting helps students organize their thoughts, think about what they know, and generate ideas. High school English teacher Jackie, who blogs at Learning in Room 213, said of devoting extra time to prewriting, “I soon discovered that spending more time on the prewriting stage resulted in better writing on not only that assignment, but on other ones throughout the semester. That’s because writing is a thinking process as much as it is a writing one.”

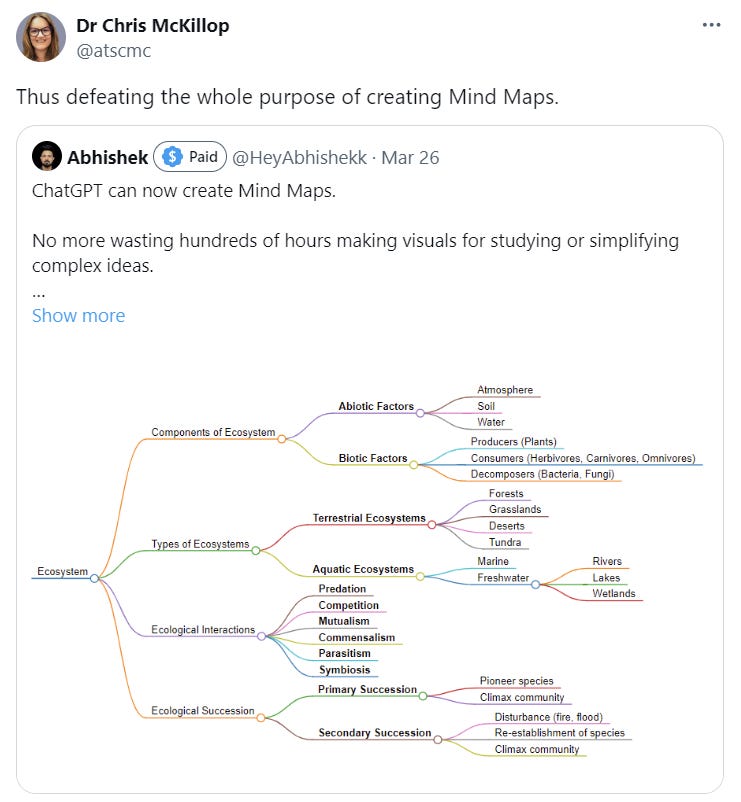

There is excitement about having ChatGPT create mind maps. However, as Dr. Chris McKillop commented, that defeats the purpose of making a mind map.

For me, prewriting starts with a FigJam so I can see how the pieces fit together. Each section name in the FigJam becomes a heading in a Google Doc, and those headings are my outline. I then draw on the notes in each FigJam section as I write the matching section of the Google Doc.

This is the prewriting FigJam I created for my blog post, Pedagogy And The AI Guest Speaker Or What Teachers Should Know About The Eliza Effect.

This is the prewriting FigJam for this post.

First Drafts

“...When we are writing, the only thing that matters is the process.” - John Warner, February 1, 2024.

We know that first drafts need not be:

Written quickly.

Grammatically correct.

Yet these are exactly the two things LLMs are good at! What problem are we solving by having students generate first drafts with LLMs such as ChatGPT?

“We therefore caution educators to think twice before heeding the advice of enthusiastic technophiles. For example, the notion that college students learn to write by using chatbots to generate a synthetic first draft, which they afterwards revise, overlooks the fundamentals of a complex process. The capacity for revision requires hard-won habits of critical reflection and rhetorical skill. Since text generators do a good job with syntax, but suffer from simplistic, derivative, or inaccurate content, requiring students to work from this shallow foundation is hardly the best way to empower their thinking, hone their technique, or even help them develop a solid grasp of an LLM’s limitations,” argued Goodlad and Baker.

As Jackie of Learning in Room 213 said, writing is a thinking process. “Drafting is thinking. Revision is thinking. It's all thinking. The struggle is the point,” said Warner. He added, “If the thing being written matters at all, the people who write their first drafts will outperform anyone who outsources it. Writing is thinking. You can’t outsource thinking to something that can’t think.” Warner’s comment about first drafts especially resonates: “If writing is thinking (as I believe it to be) the last thing you want to outsource to AI is the first draft because that's where the initial gathering of thoughts happens. It's why first drafts are hard. That difficulty signals their importance.”

“Writing is hard because the process of getting something onto the page helps us figure out what we think — about a topic, a problem or an idea. If we turn to AI to do the writing, we’re not going to be doing the thinking either,” said Jane Rosenzweig, Director of the Harvard Writing Center.

In response to synthetic text generators such as ChatGPT, the Association for Writing Across the Curriculum said, “A fundamental tenet of Writing Across the Curriculum is that writing is a mode of learning. Students develop understanding and insights through the act of writing. Rather than writing simply being a matter of presenting existing information or furnishing products for the purpose of testing or grading, writing is a fundamental means to create deep learning and foster cognitive development.”

“For me…writing is an attempt to clarify what the world is like from where I stand in it. That definition of writing couldn’t be more different from the way AI produces language: by sucking up billions of words from the internet and spitting out an imitation,” said writer Vauhini Vara.

We conclude this discussion of first drafts with thoughts from a couple of students:

“AI uses the existing ideas of others in order to generate a response. However, the response isn’t unique and doesn’t truly represent the idea the way you would. When you write, it causes you to think deeply about a topic and come up with an original idea. You uncover ideas which you wouldn’t have thought of previously and understand a topic for more than its face value. It creates a sense of clarity, in which you can generate your own viewpoint after looking at the different perspectives,” said Aditya, a student at Hinsdale High School, in The New York Times.

“When we want students to learn how to think…assignments become essentially useless once AI gets involved,” said Owen Kichizo Terry, an undergraduate at Columbia University.

The Effectiveness of LLM Chatbots For Writing

There is good reason to doubt that Large Language Models can generate quality writing.

“...Simply abiding by the rules doesn’t make excellent writing — it makes conventional, unremarkable writing, the kind usually found in business reports, policy memos and research articles...[ChatGPT’s] writing tends to carry a certain flatness. By design, the program relapses to a rhetorical median, its deviations mechanical whereas ours are organic,” said UCLA writing professor Laura Hartenberger.

“LLMs are good at generating text but not at generating novel ideas. This is, of course, an inherent feature of technology that's designed to generate plausible mathematical approximations of what you've asked it for based on its large corpus of training data; it doesn't think, and so the best you're ever going to get from it is some mashup of other peoples' thinking,” said software engineer Molly White.

Dr. Gary Marcus, Emeritus Professor of Psychology and Neural Science at New York University, explained why generative AI produces mashups of other people's thinking:

Additionally, LLMs lack an authentic, interesting voice. “Self-confidence is a prerequisite for the active and thoughtfully engaged voice one finds in good writing. Generative AI, in contrast, is ‘boring.’ It has no self-confidence because it has no self. It offers no flair, no spice, nothing that may cause your thoughts to churn the way another human’s words can,” said Vincent Carchidi, a non-resident scholar at the Middle East Institute.

Students have picked up on the poor quality of AI-generated writing. From The New York Times: “AI can only create with the information it already knows, which takes away the greatest quality writers have: creativity,” said Stella, a junior at Glenbard West High School. “...Some people still insist it’s the future for writing when in reality, AI will probably not come up with an original idea and only use possibly biased data to give to someone so they can just copy it and move on and undermine what it means to be a writer,” added John, a student at Glenbard North High School.

AI-generated writing’s poor reputation spurred Medium to announce that it would “...Revoke Partner Program enrollment from writers that publish AI-generated stories or other low-quality content that demonstrates clear misalignment with our mission,” because “As a membership-supported platform, our job is to make sure our readers are shown the best quality writing possible…” If Medium felt compelled to protect its brand by banning AI-generated writing from its Partner Program, other writing platforms could follow.

Substack has not banned paywalled AI content, though one of its co-founders wrote, “AI will never be able to replace the dynamic that is most central to Substack: human-to-human relationships…that’s why we are making Substack the place for trusted, valuable relationships between thinking, breathing, feeling people.”

Lastly, when writing non-fiction, accuracy is non-negotiable. This is what AI expert Dr. Emily Bender said about LLMs and accuracy:

Assessment

The availability of Large Language Models means teachers need to rethink assessment. As John Warner said, “We have to design learning opportunities that are not solved by automation. It's doable, but it requires rethinking around both the things we assign and how we assess them. It's an opportunity to move away from a bad status quo.”

In keeping with the idea of solidarity with students, Dr. Maha Bali, a Professor of Practice at the Center for Learning & Teaching at the American University in Cairo, asked, “What if we create a culture of transparent assessment” and “What if we took a ‘disclosure of learning process’ approach rather than prevent and punish approach?” To me, this means emphasizing process over product. Engage students in prewriting and reflection, and value those stages over the finished product so students feel less like they are writing at gunpoint.

Others have proposed assessment tasks that ChatGPT cannot do. Cañada College English Professor Anna Mills and Dr. Goodlad documented several tasks ChatGPT struggles to perform reliably in their post about adapting writing for the age of Large Language Models. In Education Week, Alyson Klein shared eight tips for creating assignments ChatGPT cannot do.

AI Detectors Do Not Work

Please do not subject students to AI detectors. Doing so sets teachers up for conflict with students and families based on faulty “evidence.” For example, AI detectors have claimed the US Constitution was written by AI. AI detectors have also shown bias against non-native English writers. Even Turnitin’s own research claims its AI detector is correct just 79% of the time.

If that evidence does not sway you, please consider what Dr. Timnit Gebru, one of the leading experts on AI, says about AI detectors:

Key Takeaways

After studying generative AI and writing, here are my key takeaways:

Writing is thinking.

Prewriting is valuable.

There is pedagogical value in first drafts. Think long and hard before turning them over to ChatGPT.

Consider assessments, written or otherwise, that ChatGPT cannot easily complete.

Consider how your assessments are solidaric with students.

Value the authenticity, originality, and creativity of writing.

If a piece of writing means anything to you, be wary of using generative AI. Use Grammarly as a grammar aid and use your authentic voice. Or as Sara Bareilles said,

Continuing The Conversation

What do you think about AI and writing? How are you adjusting writing instruction to account for generative AI? Comment below or Tweet me at @TomEMullaney.

Does your school or conference need a tech-forward educator who critically examines AI and pedagogy? Reach out on Twitter or email me at mistermullaney@gmail.com.

Post Image: The blog post image is a mashup of two images. The background photo is Woman in White Long Sleeved Shirt Holding a Pen Writing on a Paper by energetic.com on Pexels. The robot is Thinking Robot by iLexx from Getty Images.

AI Disclosure:

I wrote this post without the use of any generative AI. That means:

I developed the idea for the post without using generative AI.

I wrote an outline for this post without the assistance of generative AI.

I wrote the post from the outline without the use of generative AI.

I edited this post without the assistance of any generative AI. I used Grammarly to assist in editing the post. I have Grammarly GO turned off.

I wrote a draft of this post in Google Docs. I did not use Google Gemini.
